SEO Factors from Leaked Google Search API Documents

In a recent groundbreaking revelation, more than 2,500 pages of internal Google Search API documentation have been leaked, providing unprecedented insight into the search engine’s ranking mechanisms.

The leak, shared by an initially anonymous source, confirmed as authentic by ex-Google employees, and detailed in articles by SparkToro and iPullRank, sheds light on how Google utilises clickstream data, Chrome browser data, and a wide range of ranking factors such as site authority, user interaction (NavBoost), and quality raters’ feedback.

This article delves into these crucial ranking factors, highlighting key aspects SEO professionals need to understand and put on their strategic radar in light of these revelations.

Important Ranking Factors SEOs Should See

As it turns out, many public statements made by Google representatives over the years directly contradict what the leaked documents reveal. Examples include Google’s persistent denials that click-based user signals are used for ranking, that subdomains are assessed individually, that there is a sandbox for new websites, and that domain age matters, among others.

There’s a lot of background to this unfolding story. If you wish to read more about what exactly went down straight from the horse’s mouth, along with super-specific technical nitty-gritty, we highly recommend reading Rand Fishkin’s post on SparkToro and Mike King’s piece on iPullRank.

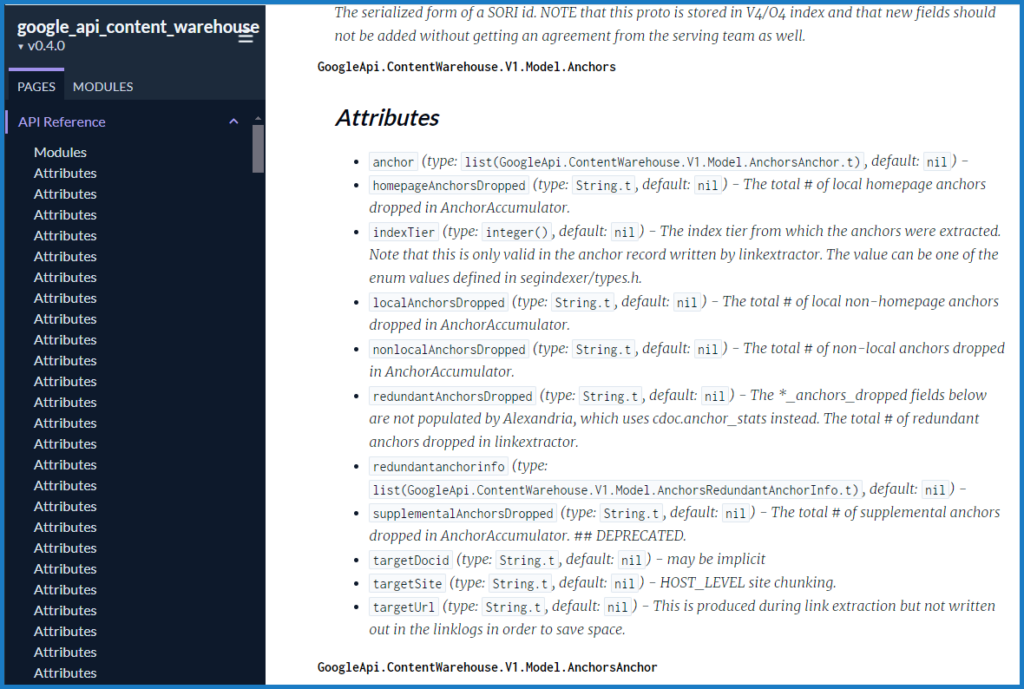

During a call with Rand, Erfan Azimi (the anonymous source, an SEO practitioner and founder of a digital agency) showed him the leak: over 2,500 pages of API documentation containing 14,014 attributes (API features) that appear to come from Google’s internal “Content API Warehouse.” According to the repository’s commit history, this documentation was uploaded to GitHub on Mar 27, 2024, and not taken down until May 7, 2024.

This documentation doesn’t show the weight of particular ranking factors in the algorithm, nor does it establish which elements are actually used in rankings. However, it does provide extremely detailed information about the data Google collects and very likely uses to rank sites.

Now, as for the scope of this piece, without further ado, let’s dive into the most important ranking factors that we’ve derived from these recent developments.

1. Site Authority

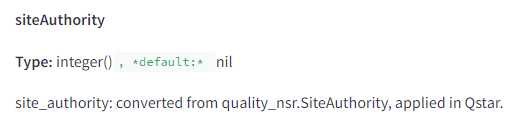

The leaked documents reference a “siteAuthority” metric, which appears to assess the overall trust and authority of a website. This contradicts Google’s public denials of using domain authority and suggests that higher-authority sites generally perform better in search rankings.

The concept of site authority underscores a pivotal ranking factor in Google’s algorithm. Site authority, akin to the widely discussed but publicly denied “domain authority” (a proprietary metric by Moz), gauges the overall trustworthiness and credibility of a website. Factors likely contributing to site authority include the quality and relevance of content, the backlink profile, and the site’s historical performance.

The higher the site authority, the more likely a website will rank well in search results. This measure is integral to Google’s efforts to prioritise high-quality, reliable sources, ensuring users receive trustworthy information.

For instance, a site with extensive, well-regarded content and a strong backlink profile from reputable sources will score higher in site authority than a newer or less established site. This approach helps Google mitigate the spread of misinformation by favouring sites that have proven their reliability over time.

While the exact metrics and algorithms behind site authority remain proprietary, you should focus on building high-quality content, acquiring reputable backlinks, and maintaining a consistent, positive user experience to enhance your site’s authority and, consequently, its search engine rankings.

2. Click Data and NavBoost

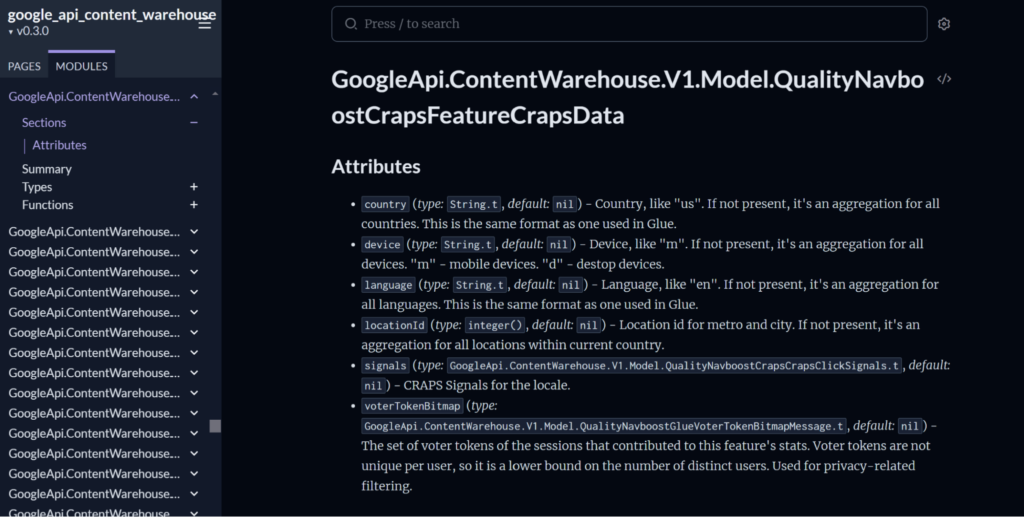

The leaked documents point to the fact that user interaction, especially click data, plays a significant role in ranking. The NavBoost system utilises clickstream data to rank pages based on user behaviour, rewarding sites with higher engagement.

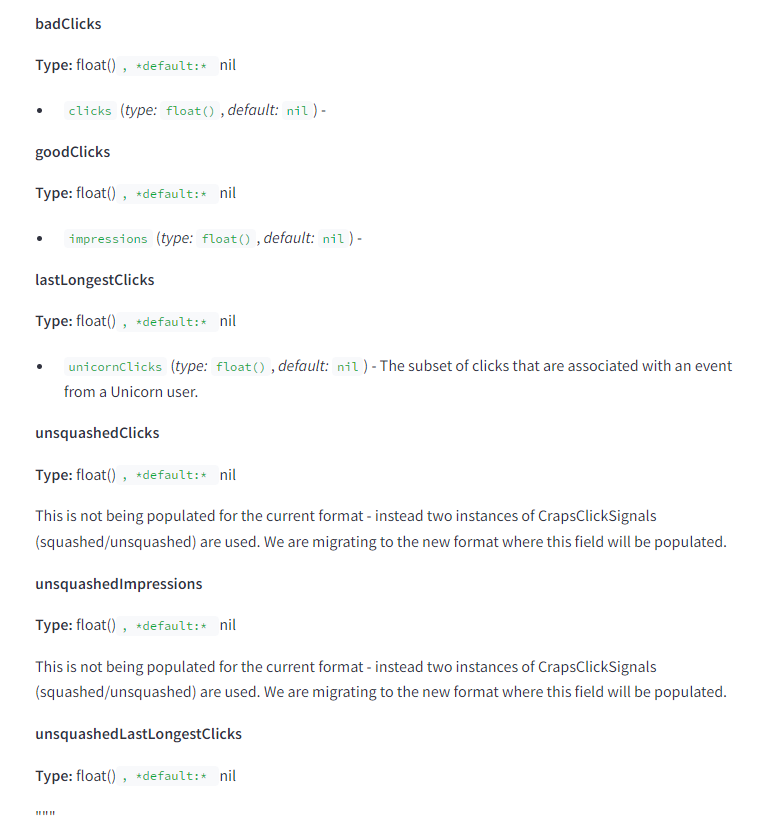

Specifically, the documentation mentions features like “goodClicks”, “badClicks”, and “lastLongestClicks”, which are tied to NavBoost and Glue, two systems referenced in testimony during the US Department of Justice’s antitrust trial against Google.

Essentially, this data encompasses user interactions with search results, such as click-through rates (CTR), bounce rates, and dwell time on a page.

Google’s NavBoost system specifically leverages click data to adjust rankings based on user behaviour. Pages with higher engagement are seen as more relevant and are thus rewarded with better rankings. This approach aims to enhance user satisfaction by prioritising content that effectively meets user intent and retains user interest.
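To make these attribute names more concrete, here is a hypothetical sketch of how a click log could be tallied into “goodClicks”, “badClicks”, and “lastLongestClicks”. The leak names the attributes but does not define them, so the dwell-time thresholds, the `Click` record, and the rule for a “last longest” click below are all assumptions for illustration, not Google’s implementation.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical thresholds (seconds); the leak does not define these values.
SHORT_CLICK_MAX_S = 10.0
LONG_CLICK_MIN_S = 60.0


@dataclass
class Click:
    session_id: str   # one search session
    url: str          # result that was clicked
    timestamp: float  # seconds since the session started
    dwell_s: float    # time spent before returning to the results page


def summarise_clicks(clicks: list[Click]) -> dict[str, dict[str, int]]:
    """Tally hypothetical good, bad and last-longest clicks per URL."""
    stats: dict[str, dict[str, int]] = {}
    sessions: dict[str, list[Click]] = {}
    for c in clicks:
        s = stats.setdefault(
            c.url, {"goodClicks": 0, "badClicks": 0, "lastLongestClicks": 0}
        )
        if c.dwell_s >= LONG_CLICK_MIN_S:
            s["goodClicks"] += 1   # user stayed: treated as satisfied
        elif c.dwell_s <= SHORT_CLICK_MAX_S:
            s["badClicks"] += 1    # quick return to results: treated as unsatisfied
        sessions.setdefault(c.session_id, []).append(c)

    # Assumption: a session's final click counts as "last longest" only if it
    # was also a long click, i.e. the search apparently ended there.
    for session in sessions.values():
        last = max(session, key=lambda c: c.timestamp)
        if last.dwell_s >= LONG_CLICK_MIN_S:
            stats[last.url]["lastLongestClicks"] += 1
    return stats


if __name__ == "__main__":
    log = [
        Click("s1", "example.com/a", 0, 5),
        Click("s1", "example.com/b", 20, 120),
        Click("s2", "example.com/a", 0, 90),
    ]
    print(summarise_clicks(log))
```

In this toy model, a page that users bounce back from quickly accumulates bad clicks, while a page that ends the search session collects last-longest clicks, which is the intuition behind optimising for engagement rather than raw CTR alone.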

For us as SEO professionals, optimising for click data involves creating compelling meta descriptions and titles to improve CTR, ensuring engaging and relevant content to reduce bounce rates, and enhancing page experience to increase dwell time. These strategies collectively signal to Google that the content is valuable and relevant, which can lead to higher search rankings.

3. Sandbox Effect

The sandbox effect, as revealed in the leaked Google documents, is a phenomenon where new websites experience a temporary suppression in search rankings, despite having quality content and good SEO practices. This period is often referred to as the “sandbox” because new sites are placed in a sort of holding area until they have proven their reliability and trustworthiness over time. In other words, domain age matters when it comes to ranking on Google.

This is managed through the “hostAge” attribute, affecting how quickly new sites can climb in rankings. It considers the age of the domain and the website. Google uses this period to ensure that new sites are not spammy and can provide consistent, valuable content to users.

As you can guess, there isn’t much you can do about this. After all, good things take time. You need to be patient and focus on consistently providing high-quality content. Also, during this period, it helps to build the site’s authority through quality backlinks. Track performance metrics and continue optimising the site, understanding that improvements in ranking may take time due to the sandbox effect.

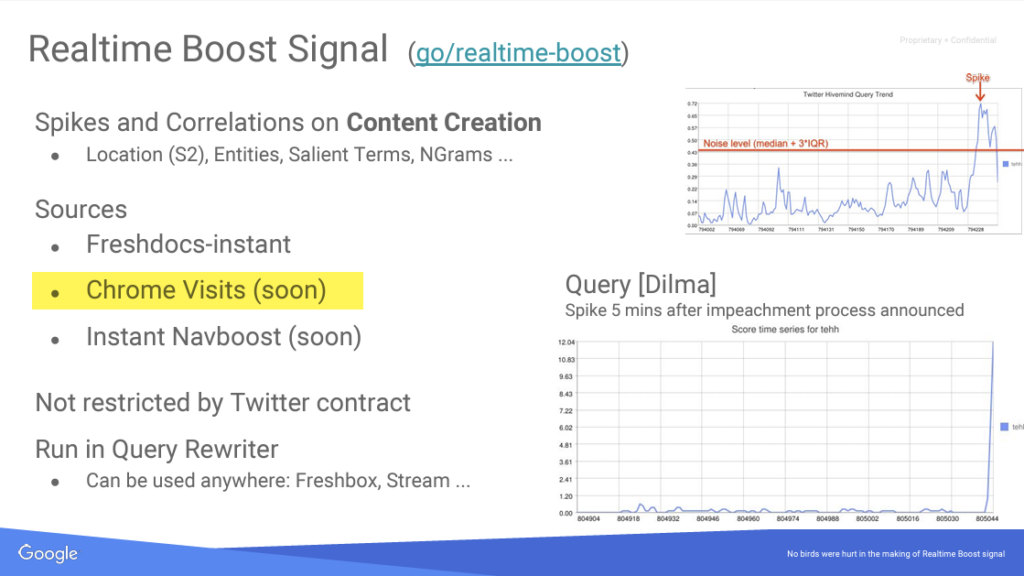

4. Chrome Data

The leaked Google documents suggest that data collected from the Chrome browser plays a role in search rankings. Despite previous denials (Matt Cutts has been quoted as saying Google does not use Chrome data for rankings, and John Mueller has more recently supported that claim), the documentation includes site-level Chrome views data, which indicates that it is used to refine and improve Google’s algorithms.

According to the documents, Chrome data reflects user interactions such as time spent on a page, clicks, and browsing patterns. This data can help Google understand user preferences and behaviour, allowing it to rank pages based on real-world usage. Metrics such as bounce rate, session duration, and scroll depth, if collected via Chrome, would provide valuable insights into user experience and content quality.

Notably, another Chrome-derived attribute, “topURL” (a list of the top URLs by Chrome clicks), also appears in the documentation, apparently in the module that generates sitelinks.

By incorporating Chrome data, Google can better assess the relevance and quality of a webpage, ensuring that high-engagement pages are rewarded with better rankings.

So, what should you do with this info? Well, focus on enhancing user experience by improving page load times, navigation, and content engagement. Craft interactive content, clear calls-to-action, and visually appealing design. Use tools like Hotjar to monitor and analyse user behaviour on your website and identify areas for improvement.

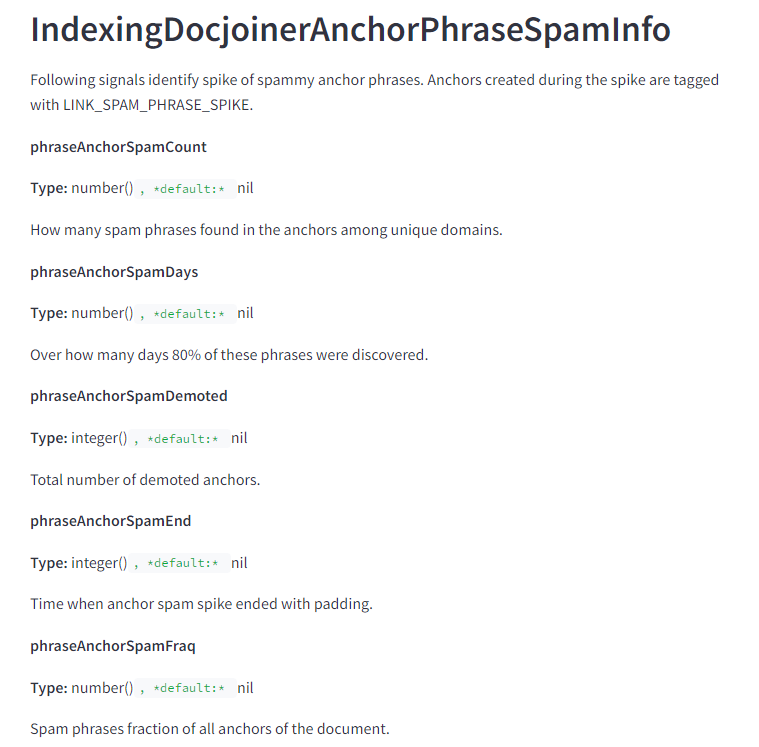

5. Links and Anchor Text

Not surprisingly, links remain a vital ranking factor, with both the quantity and quality of backlinks influencing a site’s authority and ranking. High-quality links from reputable sites can significantly boost a site’s search performance.

The leaked documents highlight several aspects of how links influence rankings:

- Quality Over Quantity: High-quality backlinks from authoritative and relevant sites significantly boost a website’s ranking. Google prioritises links that come from credible sources over a large number of low-quality links.

- Contextual Relevance: Links embedded within relevant, high-quality content carry more weight than those in unrelated or low-quality contexts.

- Anchor Text: The clickable text of a link, or anchor text, provides context about the linked page’s content. Descriptive and relevant anchor text can improve a page’s relevance signals and help it rank better for specific keywords.

When it comes to anchor text, including target keywords can enhance a page’s relevance for those terms. However, over-optimisation or excessive use of exact-match keywords can lead to penalties. Anchor text should be integrated naturally within the content, providing a seamless reading experience without appearing forced or spammy. Use a mix of exact-match, partial-match, branded, and generic anchor text to create a natural link profile.
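If you want to check that mix on your own backlink export, a rough audit helper might look like the sketch below. It is a simple heuristic of our own, not how Google classifies anchors; the `GENERIC_ANCHORS` list, the brand and keyword values, and the bucket rules are all assumptions you would tune for your site.

```python
from __future__ import annotations

from collections import Counter

# Hypothetical list of generic anchors; extend it for your own audit.
GENERIC_ANCHORS = {"click here", "read more", "here", "learn more", "this page", "website"}


def classify_anchor(anchor: str, target_keyword: str, brand: str) -> str:
    """Put one anchor text into a rough bucket (simple heuristic, not Google's)."""
    text = " ".join(anchor.lower().split())  # normalise case and whitespace
    keyword = " ".join(target_keyword.lower().split())
    if text == keyword:
        return "exact-match"
    if brand.lower() in text:
        return "branded"
    if text in GENERIC_ANCHORS:
        return "generic"
    if set(keyword.split()) & set(text.split()):
        return "partial-match"
    return "other"


def anchor_mix(anchors: list[str], target_keyword: str, brand: str) -> dict[str, float]:
    """Return the share of each anchor type across a backlink profile."""
    if not anchors:
        return {}
    counts = Counter(classify_anchor(a, target_keyword, brand) for a in anchors)
    return {kind: n / len(anchors) for kind, n in counts.items()}


if __name__ == "__main__":
    anchors = ["SEO audit", "Acme SEO", "click here", "free SEO audit checklist", "great resource"]
    print(anchor_mix(anchors, "seo audit", "Acme"))
```

A profile where the exact-match share dwarfs every other bucket is the kind of pattern the over-optimisation warning above refers to.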

The best way to build links is to produce high-quality, valuable content that naturally attracts backlinks from other reputable sites. The next best way is to engage in outreach campaigns to build relationships with industry influencers and websites to earn backlinks via valuable content contributions such as guest blogs. Also, never discount the power of using internal links to distribute link equity throughout your site, helping to boost the ranking of key pages.

6. Content

Everything you know about the importance of quality content in SEO stays true, as you’d expect.

However, there are some nuances derived from this leak that would help shape your future SEO content strategy:

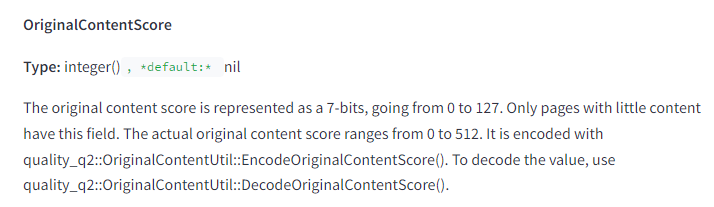

- Short content might be as good as long-form content provided it shares original and valuable insights. The “OriginalContentScore” metric suggests that short content is scored for its originality. Thus, thin content is not always a problem of length.

- Google counts the number of tokens and the ratio of total words in the body to the number of unique tokens. The docs suggest there is a maximum number of tokens that can be considered for a document, thus reinforcing that you should place your most important content early.

- Font size matters. Google is tracking the average weighted font size of terms in documents. The same goes for the anchor text of links.
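The token points above can be illustrated with a short sketch: count tokens and unique tokens, compute their ratio, and see what survives a cap on the number of tokens considered. The crude regex tokeniser and the `MAX_TOKENS` value of 1,000 are assumptions; the leak suggests a cap exists but this sketch does not reflect its real value or Google’s tokeniser.

```python
from __future__ import annotations

import re

# Hypothetical cap; the leak suggests a maximum exists but not this value.
MAX_TOKENS = 1000


def token_stats(body: str, max_tokens: int = MAX_TOKENS) -> dict[str, object]:
    """Count tokens, unique tokens and their ratio, and show what fits under a cap."""
    tokens = re.findall(r"\w+", body.lower())  # crude word tokeniser
    unique = set(tokens)
    return {
        "num_tokens": len(tokens),
        "num_unique_tokens": len(unique),
        "tokens_per_unique_token": len(tokens) / len(unique) if unique else 0.0,
        "truncated": len(tokens) > max_tokens,
        # Anything past the cap is simply ignored in this model, which is
        # why the most important content belongs early on the page.
        "considered_text": " ".join(tokens[:max_tokens]),
    }


if __name__ == "__main__":
    print(token_stats("The cat sat on the mat", max_tokens=3))
```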

7. Onsite Prominence

“OnSiteProminence” is a metric that assesses the importance of a document within a website. It is calculated by simulating traffic flow from the homepage and high-traffic pages (referred to as “high craps click pages”).

Essentially, it evaluates internal link scores, determining how prominently a page is featured within the site’s internal linking structure. So, the more links a page receives from important pages (like the homepage), the higher its OnSiteProminence score. Google uses simulated traffic to measure how often users might navigate to a page from key entry points on the site.
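“Simulating traffic flow from the homepage” closely resembles personalised PageRank, where a random visitor follows internal links and periodically restarts at chosen entry pages. The sketch below uses that technique as a stand-in to estimate prominence on your own site; the damping factor, iteration count and seed weights are assumptions, and the leak does not say OnSiteProminence is computed exactly this way.

```python
from __future__ import annotations


def onsite_prominence(
    links: dict[str, list[str]],
    seeds: dict[str, float],
    damping: float = 0.85,
    iterations: int = 50,
) -> dict[str, float]:
    """Personalised PageRank over internal links, restarting at seed pages.

    links: page -> internal pages it links to.
    seeds: entry pages (homepage, high-traffic pages) -> share of entry traffic.
    """
    pages = set(links) | set(seeds) | {t for ts in links.values() for t in ts}
    total = sum(seeds.values())
    restart = {p: seeds.get(p, 0.0) / total for p in pages}
    score = dict(restart)
    for _ in range(iterations):
        # Some visitors re-enter the site at the seed pages each step.
        nxt = {p: (1 - damping) * restart[p] for p in pages}
        for page, s in score.items():
            targets = links.get(page, [])
            if targets:
                for t in targets:  # the rest follow internal links evenly
                    nxt[t] += damping * s / len(targets)
            else:
                for p in pages:  # dead end: traffic returns to entry pages
                    nxt[p] += damping * s * restart[p]
        score = nxt
    return score


if __name__ == "__main__":
    site = {
        "/": ["/services", "/blog"],
        "/services": ["/contact"],
        "/blog": ["/blog/post-1"],
        "/blog/post-1": ["/services"],
    }
    for page, value in sorted(onsite_prominence(site, {"/": 1.0}).items(), key=lambda kv: -kv[1]):
        print(f"{page:15} {value:.3f}")
```

Pages that score low despite being commercially important are candidates for extra links from the homepage or other high-traffic pages.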

What does this mean for SEO? Ensure key pages link to important content within your site to boost their prominence. Include links to significant pages from your homepage and other high-traffic pages to improve their scores. Make it easy for users to find and navigate to crucial pages from multiple points within your site.

By optimising the internal linking structure and strategically linking to important pages, you can enhance their OnSiteProminence scores, thereby improving their visibility and ranking potential within Google’s search results.

8. Page Update

A key takeaway from the leaked documents is that irregularly updated content has the lowest storage priority for Google and is unlikely to appear in search results for freshness-related queries.

By storage priority, we mean that Google appears to store its index in tiers:

- Flash memory: For the most important and regularly updated content.

- Solid State Drives: For less important content.

- Standard Hard Drives: For irregularly updated content.

So, it’s crucial to regularly update your content. Enhance it with unique information, new images, videos, etc. Additionally, Google has an attribute called “pageQuality” and appears to use an LLM to estimate “effort” for article pages. This metric could help Google figure out whether a page could be easily replicated. Again, unique images, videos, embedded tools, and depth of content can help you score high on this metric.

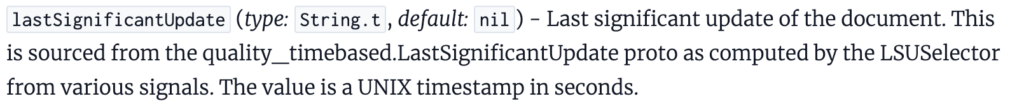

Furthermore, according to the leak, Google maintains a record of every version of a web page, effectively creating an internal Web Archive. However, Google only utilises the last 20 versions of a document. By updating a page, allowing it to be crawled, and repeating this process a few times, you can push out older versions. Given that historical versions carry different weights and scores, this information can be useful. The documentation distinguishes between “Significant Update” and “Update,” though it’s unclear if significant updates are necessary for this version management strategy.
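The “last 20 versions” behaviour maps naturally onto a fixed-size queue, which also shows why a few crawled updates push the oldest versions out. This is a minimal sketch, assuming a new version is only stored when the content changes; the `PageHistory` class and the content-hash scheme are hypothetical, and how Google weights each retained version is not known.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass, field

MAX_VERSIONS = 20  # per the leak, only the last 20 versions are used


@dataclass
class PageHistory:
    url: str
    # Each entry: (content_hash, is_significant_update); the oldest falls off.
    versions: deque = field(default_factory=lambda: deque(maxlen=MAX_VERSIONS))

    def record_crawl(self, content_hash: str, significant: bool = False) -> None:
        """Store a new version only if the crawled content changed (assumption)."""
        if self.versions and self.versions[-1][0] == content_hash:
            return
        self.versions.append((content_hash, significant))

    def oldest_retained(self) -> str | None:
        return self.versions[0][0] if self.versions else None


if __name__ == "__main__":
    history = PageHistory("/pricing")
    for i in range(25):  # 25 distinct crawled updates
        history.record_crawl(f"v{i}")
    print(len(history.versions), history.oldest_retained())
```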

9. Page Title

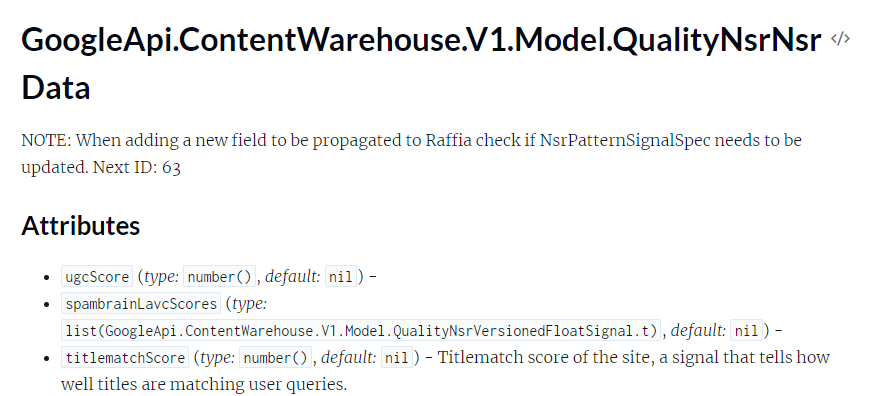

The documentation reveals the existence of a “titlematchScore”. This indicates that Google still places significant value on how well a page title matches the search query.

Thus, placing your target keywords at the beginning of the page title is still a good idea.
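The leak does not say how “titlematchScore” is computed. As a rough illustration of why keyword placement could matter, here is a hypothetical scorer that rewards query terms appearing in the title and gives slightly more credit to earlier positions; the 0.5 position penalty is an arbitrary assumption.

```python
from __future__ import annotations

import re


def title_match_score(title: str, query: str) -> float:
    """Share of query terms found in the title, weighted towards earlier positions.

    Hypothetical: a term at the start earns 1.0, a term at the end earns about 0.5.
    """
    title_terms = re.findall(r"\w+", title.lower())
    query_terms = re.findall(r"\w+", query.lower())
    if not title_terms or not query_terms:
        return 0.0
    score = 0.0
    for term in query_terms:
        if term in title_terms:
            pos = title_terms.index(term)  # first occurrence
            score += 1.0 - 0.5 * pos / len(title_terms)
    return score / len(query_terms)


if __name__ == "__main__":
    for t in ["SEO Audit Checklist for 2024", "The Complete 2024 Checklist for an SEO Audit"]:
        print(f"{title_match_score(t, 'seo audit'):.3f}  {t}")
```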

Gary Illyes has said that the idea of an optimal character count for metadata is a misconception created by SEOs. Consistent with that, the dataset does not include any metrics that count the length of page titles or snippets. The only character-count measure found in the documentation is “snippetPrefixCharCount”, which determines what can be used as part of the snippet.

This bolsters our understanding that while lengthy page titles might not be ideal for driving clicks, they can still positively impact rankings.

10. Travel Sites

The leaked documents suggest that Google might use a whitelist for travel sites, aiming for high-quality results in this sector. This could extend beyond the “Travel” search tab to broader web searches. Additionally, flags like “isCovidLocalAuthority” and “isElectionAuthority” indicate that Google whitelists certain domains for sensitive queries, such as health information and elections.

For instance, after the 2020 US Presidential election, misinformation and calls for violence necessitated reliable search results to prevent further conflict. These whitelists help ensure accurate and responsible information is prioritised, supporting social stability and informed decision-making.

11. Hotel Sites and Rating Markup

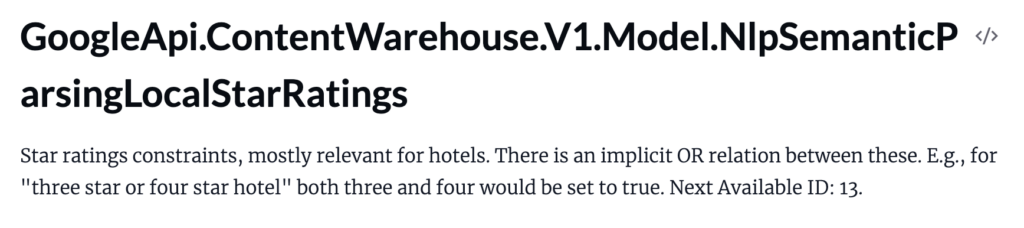

The leaked documentation also outlines attributes related to star ratings, particularly relevant for hotel listings. Each star level (one to five, including half-star increments) is represented by a boolean attribute.

The model also includes fields for “orFewer” and “orMore” to indicate a range of star ratings. These attributes help Google understand and categorise the quality ratings of hotels in search results.
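A data structure like the one described (one boolean per star level plus range flags) can be sketched as below to show how the flags might combine when filtering hotels. The `StarRatingFilter` class and its `or_fewer`/`or_more` fields are hypothetical, modelled loosely on the “orFewer” and “orMore” names; they are not the leaked schema.

```python
from __future__ import annotations

from dataclasses import dataclass

# One to five stars in half-star steps, as described above.
STAR_LEVELS = [x / 2 for x in range(2, 11)]


@dataclass
class StarRatingFilter:
    """Hypothetical model: one boolean per star level plus range flags."""
    selected: dict[float, bool]
    or_fewer: bool = False  # e.g. "3 stars or fewer"
    or_more: bool = False   # e.g. "4 stars or more"

    def __post_init__(self) -> None:
        for level in self.selected:
            if level not in STAR_LEVELS:
                raise ValueError(f"unsupported star level: {level}")

    def matches(self, hotel_stars: float) -> bool:
        chosen = [level for level, on in self.selected.items() if on]
        if not chosen:
            return True  # no star constraint selected
        if self.or_more and hotel_stars >= min(chosen):
            return True
        if self.or_fewer and hotel_stars <= max(chosen):
            return True
        return hotel_stars in chosen


if __name__ == "__main__":
    four_plus = StarRatingFilter({4.0: True}, or_more=True)
    print([s for s in STAR_LEVELS if four_plus.matches(s)])
```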

For SEO professionals, understanding how Google uses star ratings can enhance local SEO strategies. Accurately reflecting star ratings on your website and structured data can improve visibility in search results, particularly for hotel listings. Ensuring consistent reviews and proper schema markup for star ratings can help optimise search performance and user trust.
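For the markup side, schema.org defines a `starRating` property (of type `Rating`) on `Hotel` and other lodging businesses. The helper below is a minimal sketch that emits JSON-LD for it; the hotel name and URL are placeholders, and guest review scores would normally go in `aggregateRating` rather than `starRating`.

```python
import json


def hotel_jsonld(name: str, url: str, stars: float) -> str:
    """Build schema.org Hotel markup carrying the official star classification."""
    valid = {x / 2 for x in range(2, 11)}  # 1.0 to 5.0 in half-star steps
    if stars not in valid:
        raise ValueError("stars must be between 1 and 5 in half-star steps")
    data = {
        "@context": "https://schema.org",
        "@type": "Hotel",
        "name": name,
        "url": url,
        "starRating": {"@type": "Rating", "ratingValue": stars},
    }
    return json.dumps(data, indent=2)


if __name__ == "__main__":
    # Place the output inside a <script type="application/ld+json"> tag.
    print(hotel_jsonld("Harbour View Hotel", "https://www.example.com/", 4.5))
```

Keep the `ratingValue` identical to the star rating shown on the page so the markup and visible content agree.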

12. Image Search

In the leaked documents, there is a “mediaOrPeopleEntities” attribute that identifies the five most relevant entities (such as people or media) annotated with specific Knowledge Graph collections.

The information helps Google’s Image Search detect when search results predominantly feature a single person or media entity. This capability ensures that image search results are diverse and representative of different relevant entities, enhancing user experience by avoiding over-concentration on a single entity.
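Detecting that a result set “predominantly features a single entity” can be sketched as a simple concentration check over each result’s top entity. This is a hypothetical illustration: the entity ID format, the use of only the first-ranked entity, and the 0.6 threshold are all assumptions rather than details from the leak.

```python
from __future__ import annotations

from collections import Counter


def dominant_entity(results: list[list[str]], threshold: float = 0.6) -> str | None:
    """Return the entity that tops most results, if its share reaches `threshold`.

    results: for each image result, up to five entity IDs ranked by relevance.
    """
    top = [entities[0] for entities in results if entities]
    if not top:
        return None
    entity, count = Counter(top).most_common(1)[0]
    return entity if count / len(top) >= threshold else None


if __name__ == "__main__":
    serp = [
        ["kg:person_a", "kg:film_b"],
        ["kg:person_a"],
        ["kg:person_a", "kg:film_c"],
        ["kg:film_b"],
    ]
    print(dominant_entity(serp))
```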

In terms of SEO, you can focus on:

- Diverse Content: Ensure your images are well-tagged with a variety of relevant entities to enhance their visibility in search results.

- Knowledge Graph Optimisation: Link your content to appropriate Knowledge Graph entities to improve topical relevance.

- Avoid Over-Concentration: Provide diverse and varied media to prevent over-concentration on a single entity.

This approach can help in achieving better visibility and user engagement in Google’s Image Search.

13. Website Topicality Score

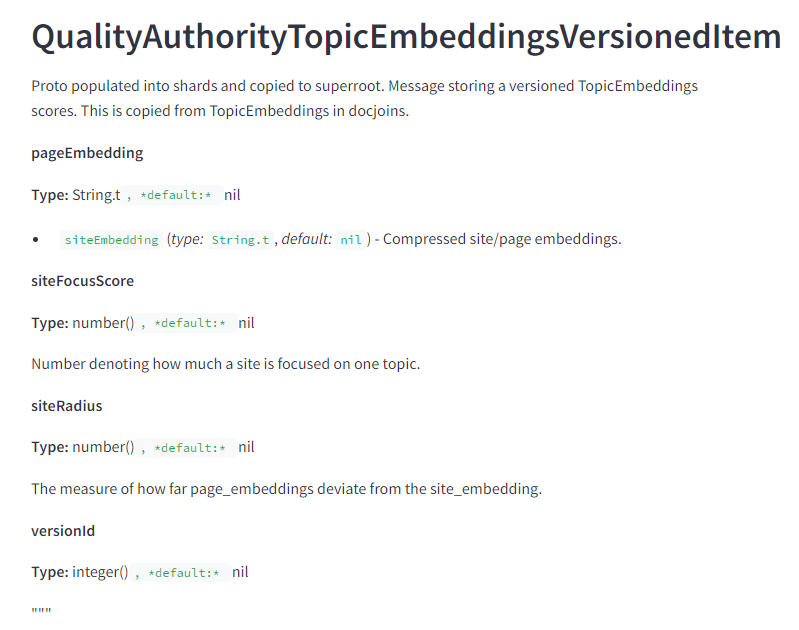

Google seems to be vectorising pages and websites, comparing page embeddings and content to the site embeddings to see how off-topic each page is.

The “siteFocusScore” considers how well a site sticks to a single topic. The “siteRadius” captures how much a page deviates from the core topic based on the site2vec vectors generated for the site.
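The leak mentions site2vec embeddings but not the model behind them. Assuming you already have one embedding vector per page (from any text-embedding model), a simple stand-in is to average them into a site centroid and measure each page’s cosine distance from it. The function names and the exact focus formula below are hypothetical and only approximate the idea behind “siteFocusScore” and “siteRadius”.

```python
from __future__ import annotations

import math


def _cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def site_topicality(page_vectors: dict[str, list[float]]) -> tuple[float, dict[str, float]]:
    """Return (focus, per-page distance from the site centroid).

    distance: 1 minus cosine similarity to the centroid; higher means more off-topic.
    focus: 1 minus the mean distance; closer to 1 means a tighter topical cluster.
    """
    if not page_vectors:
        raise ValueError("need at least one page vector")
    vectors = list(page_vectors.values())
    dims = len(vectors[0])
    centroid = [sum(v[i] for v in vectors) / len(vectors) for i in range(dims)]
    distances = {url: 1.0 - _cosine(v, centroid) for url, v in page_vectors.items()}
    focus = 1.0 - sum(distances.values()) / len(distances)
    return focus, distances


if __name__ == "__main__":
    focus, dist = site_topicality({"/a": [1.0, 0.0], "/b": [0.9, 0.1], "/c": [0.0, 1.0]})
    print(round(focus, 3), max(dist, key=dist.get))  # "/c" strays furthest
```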

Put simply, the score measures a site’s relevance to specific topics. High topicality scores — aka topical authority — can boost a site’s rankings for queries related to its primary topics.

Sites with content deeply focused on specific topics should be scored higher for those topics. So, focus your strategy on developing comprehensive content around specific topics to establish authority. Use relevant keywords naturally within the content to signal topic relevance. Also, create a strong internal linking structure to connect related content and reinforce topical focus.

14. Authors

It came to light that Google stores author information explicitly, highlighting the significance of authorship in rankings.

As noted in Mike’s article, the leaked documents suggest that Google identifies authors and treats them as entities within its system. So, building an online presence and influence as a quality author may lead to ranking benefits.

However, the exact impact of “E-E-A-T” (Experience, Expertise, Authoritativeness, Trustworthiness) on rankings remains debatable. There is concern that E-E-A-T may be more marketing than substance, as many high-ranking brands lack significant experience or trustworthiness.

15. Byline Date

Content date matters, too. Google wants to deliver fresh content, and the documents emphasise the importance of associating dates (“bylineDate”, “syntacticDate”, and “semanticDate”) with pages.

Put simply, specify a date and be consistent with it across structured data, page titles, and XML sitemaps.
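A quick way to audit that consistency is to collect the same “last updated” date from each place it appears and confirm they agree on the day. In this minimal sketch the source labels are hypothetical, and extracting the raw strings from your pages, structured data and sitemap is left out; it only assumes ISO 8601 strings (avoid a trailing `Z`, which `datetime.fromisoformat` only accepts from Python 3.11).

```python
from __future__ import annotations

from datetime import date, datetime


def check_date_consistency(sources: dict[str, str]) -> tuple[bool, dict[str, date]]:
    """Parse ISO 8601 dates from each source and report whether they agree on the day."""
    parsed = {name: datetime.fromisoformat(raw).date() for name, raw in sources.items()}
    return len(set(parsed.values())) <= 1, parsed


if __name__ == "__main__":
    ok, dates = check_date_consistency({
        "json_ld_dateModified": "2024-05-30T09:00:00+10:00",
        "visible_updated_date": "2024-05-30",
        "sitemap_lastmod": "2024-06-02",
    })
    print(ok, dates)  # the sitemap date disagrees, so ok is False
```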

16. Panda

The Panda algorithm, detailed in the leaked Google documents, targets low-quality content to ensure users receive valuable and relevant information. Panda assesses websites for issues like thin content, duplicate content, and overall content quality, and it can impact entire sites rather than just individual pages.

Panda uses a variety of quality signals stored in Google’s databases to evaluate and rank content. These signals include user engagement metrics and other quality indicators. User behaviour data, such as long clicks and bounce rates, are significant factors. High bounce rates can indicate low-quality content, while long clicks suggest valuable content. If a website has a significant amount of low-quality content, the entire site can be affected, leading to a demotion in overall search rankings.

To tackle this, focus on creating comprehensive, original, and valuable content that meets user needs. Regularly audit your site for thin or duplicate content and either enhance or remove such pages. Focus on driving more successful clicks using a broader set of queries and creating more link diversity. This would also help recover from the Helpful Content Update if your site was impacted.
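For the audit step, a common technique for spotting near-duplicate pages is word shingling with Jaccard similarity; it is a generic method, not Panda’s. In the sketch below the 300-word “thin” flag and the 0.8 duplicate threshold are hypothetical, and word count is only a first-pass filter, since short pages with original insight are not necessarily thin.

```python
from __future__ import annotations

import re
from itertools import combinations


def shingles(text: str, k: int = 5) -> set[tuple[str, ...]]:
    """Overlapping k-word sequences used to compare pages."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < k:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def jaccard(a: set, b: set) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def audit_pages(pages: dict[str, str], min_words: int = 300, dup_threshold: float = 0.8):
    """Flag short pages and near-duplicate pairs (hypothetical thresholds)."""
    thin = [url for url, text in pages.items() if len(re.findall(r"\w+", text)) < min_words]
    sigs = {url: shingles(text) for url, text in pages.items()}
    duplicates = []
    for u, v in combinations(sorted(pages), 2):
        sim = jaccard(sigs[u], sigs[v])
        if sim >= dup_threshold:
            duplicates.append((u, v, round(sim, 2)))
    return thin, duplicates


if __name__ == "__main__":
    body = " ".join(f"word{i}" for i in range(400))
    print(audit_pages({"/a": body, "/b": body, "/c": "short page"}))
```

Flagged pages are candidates to enhance, consolidate, or remove, in line with the advice above.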

17. Geo Location

Based on the documents, Google attempts to associate pages with a location and rank them accordingly. Thus, geographic location influences search results, with local SEO practices helping sites rank better in specific regions. Local relevance and proximity are key factors for local search rankings.

NavBoost geo-fences click data, considering country and state/province levels, alongside mobile versus desktop usage. But if Google lacks data for certain regions or user-agents, it may apply the process universally to the query results.
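The fencing and fallback described above can be sketched as: use the narrowest slice (region plus device) if it has enough clicks, otherwise widen to country plus device, then country, and finally global data. The slice order and the 50-click minimum are assumptions for illustration; the leak describes the geo-fencing, not its thresholds.

```python
from __future__ import annotations

from collections import Counter

# Hypothetical minimum sample before a narrower slice is trusted.
MIN_CLICKS = 50


def geo_click_share(clicks, country, region, device, min_clicks=MIN_CLICKS):
    """Share of clicks per URL for the narrowest slice with enough data.

    clicks: list of (country, region, device, url) tuples.
    Falls back from region+device to country+device, country, then global.
    """
    slices = [
        ("region+device", lambda c: c[:3] == (country, region, device)),
        ("country+device", lambda c: c[0] == country and c[2] == device),
        ("country", lambda c: c[0] == country),
        ("global", lambda c: True),
    ]
    for level, keep in slices:
        urls = [c[3] for c in clicks if keep(c)]
        if len(urls) >= min_clicks or level == "global":
            counts = Counter(urls)
            return level, {url: n / len(urls) for url, n in counts.items()}


if __name__ == "__main__":
    log = [("AU", "VIC", "mobile", "/melbourne")] * 3 + [("AU", "NSW", "mobile", "/sydney")] * 60
    print(geo_click_share(log, "AU", "VIC", "mobile"))  # too few VIC clicks, widens to AU mobile
```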

Put simply, Google prioritises local businesses and content that are geographically relevant to the user’s location. Search results are tailored to include businesses, services, and information closest to the user’s current location. Pages with content that specifically addresses local needs or interests are favoured in rankings.

In SEO terms, incorporate local keywords and phrases relevant to your target audience. Create content that addresses local issues, events, and interests to engage your regional audience. Obtain citations and backlinks from local directories and websites to boost your local authority.

18. Demotions

Certain practices or content types can lead to ranking demotions. We talked about demotion due to Panda. Here are some more algorithmic demotions you need to consider for your future SEO strategy:

- Anchor Mismatch: Links whose anchor text doesn’t match the target site are demoted for lack of relevance.

- SERP Demotion: Pages demoted based on negative signals from search results, possibly due to low clicks.

- Nav Demotion: Poor navigation or user experience can lead to demotion.

- Exact Match Domains Demotion: Reduced value for exact match domains since 2012.

- Product Review Demotion: Likely linked to recent updates targeting low-quality reviews.

- Location Demotions: Global pages may be demoted in favour of location-specific relevance.

- Porn Demotions: Obvious category-specific demotions.

- Other Link Demotions: Additional link-based penalties.

Overall, these demotions highlight the importance of creating high-quality content, ensuring a positive user experience, and adhering to ethical SEO practices.

Google Search API Leak Tools

Following the recent leak of Google Search API documentation, we have curated a comprehensive selection of tools to help you navigate and analyse this extensive dataset. These tools are designed to assist you in extracting valuable insights and identifying the most crucial aspects that matter to you.

By using these, you can go through the massive amount of data with ease and focus on what’s truly important. Whether you are looking to enhance your SEO strategy, understand user behaviour, or simply stay informed about the latest trends, these tools will provide you with the necessary capabilities to do so.

The leaked Google Search API documents provide invaluable insights into the complex algorithms driving search rankings. Key factors such as site authority, click data, NavBoost, the sandbox effect, domain age, Chrome data, and several demotion signals offer a comprehensive understanding of what influences search visibility.

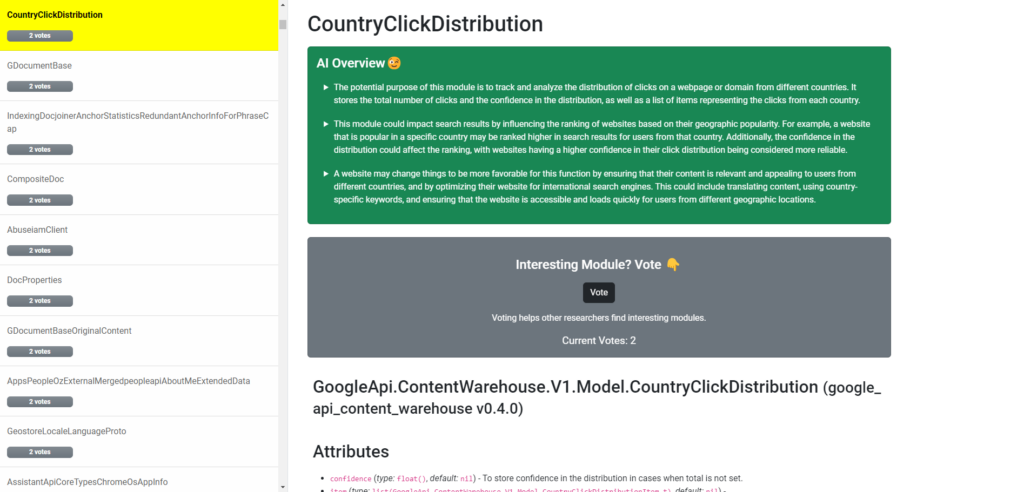

2596.org

2596.org is a website that features a searchable interface and AI-powered overviews for each of the leaked Google Search API documentation modules. This platform is designed to help you dive deep into the specifics of the leak, providing you with detailed insights and a user-friendly experience. Explore the site to uncover the valuable information hidden within each module and make the most of this extensive dataset.

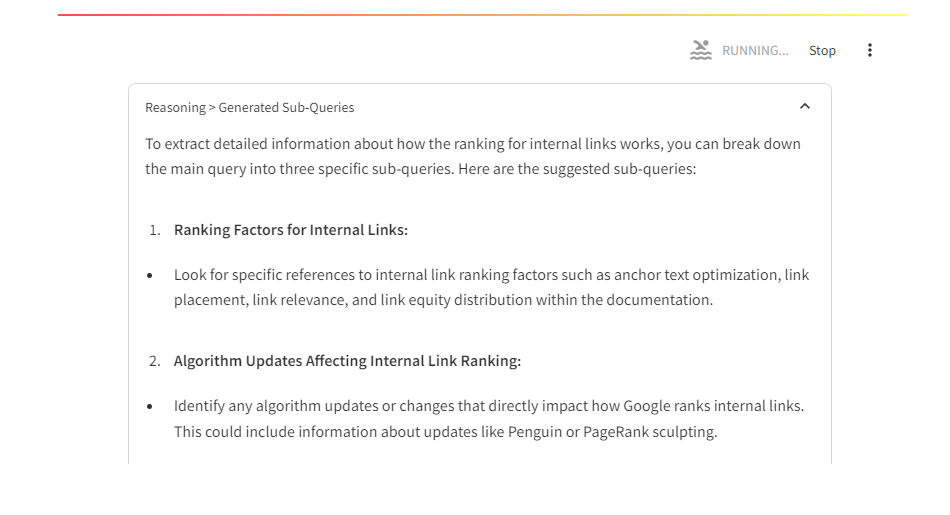

Google Leak Reporting Tool

This tool offers an AI-generated breakdown of the leaked Google Search API documentation. With this tool, you can ask specific questions such as, “Extract all the information about how the ranking for internal links works,” and it will generate a comprehensive report for you. This feature allows you to quickly and efficiently gain insights from the extensive dataset, making it easier to understand and utilise the information.

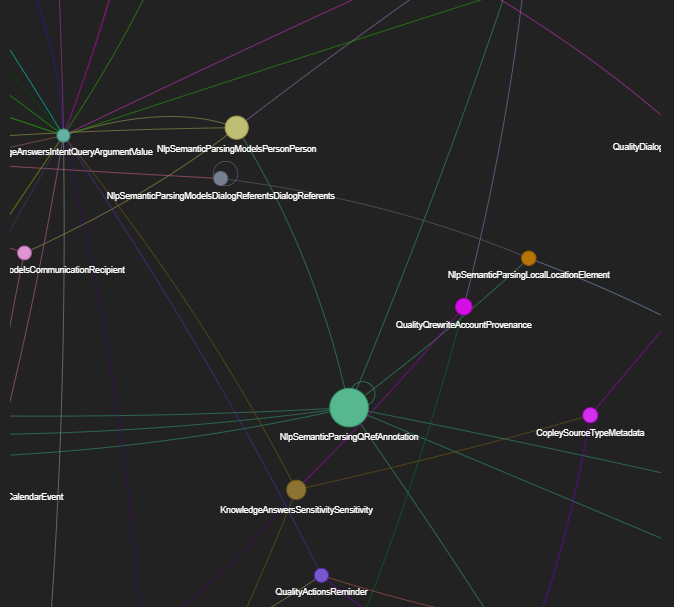

Google’s Ranking Features Modules Relations

Explore a visualisation of Google Search API documentation entries, designed to help you understand the intricate connections and dependencies within the leaked documentation. This visualisation provides a clear and intuitive representation of how different ranking features interact and influence each other.

By using this tool, you can gain deeper insights into the underlying structure of Google’s ranking algorithms. It helps you identify key relationships and patterns that might not be immediately apparent from the raw data.

Conclusion

Come what may, by focusing on high-quality content, ethical SEO practices, and optimising for user experience, you can navigate these factors effectively. Staying informed and adaptive to these insights is your best bet in maintaining and improving search rankings in SEO’s fast-evolving landscape.

Need help in nailing these ranking factors for your website? We have extensive experience in improving and maintaining Google rankings for our clients by taking into account all of these factors and more. Get a proposal today!
